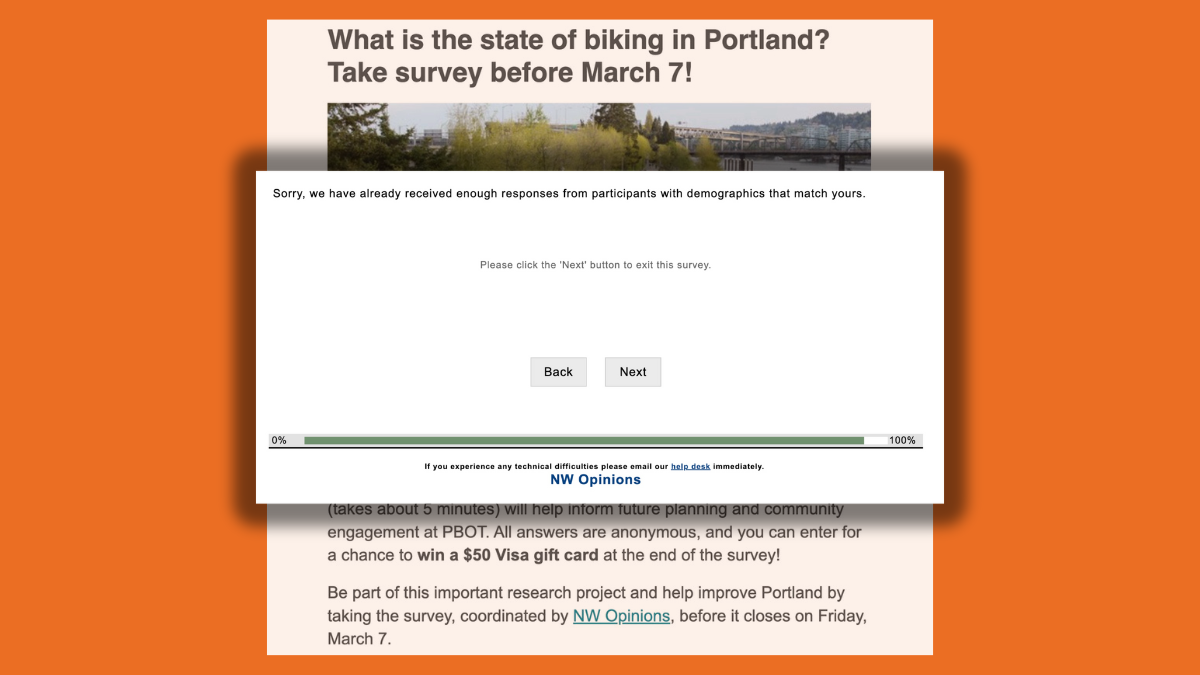

Usually when a government agency puts out a survey, they want as many responses as possible. That’s why my eyebrows raised a bit when I heard from someone who took a recent Portland Bureau of Transportation survey and told me they weren’t allowed to complete it.

The survey was sent out in a PBOT Safe Routes to School email on March 4th. “Portland is known as a bike-friendly city, but do Portlanders feel the same way?” the blurb with a link to the survey read. “PBOT wants to know what you think about bicycling, whether you currently bike or not.” Responses to the short survey would help inform future planning and community engagement and it was billed by PBOT as an “important research project”.

“I attempted to take the survey,” shared reader Matt S. in an email to BikePortland yesterday. “And after completing several pages of it, I was informed that, ‘Sorry, we’ve already received enough responses from participants with demographics that match yours’ and was kicked out of the survey.” Matt said he was “gobsmacked” at what he felt was “outrageous, egregious, anti-democratic conduct” by PBOT and its partner on the survey, NW Opinions.

I noted others who posted on the BikeLoud PDX Slack that they received the same message. “Apparently they’ve heard enough from young hispanic men in District 4,” wrote one person who got the same message as Matt. “District 3 filled up too,” and “I guess too many middle-aged cis women in District 4,” wrote other respondents who were also kicked out of the survey before being able to complete it.

When I posted about this on Instagram, one person seemed to support the tactic. “This is amazing!!! Great to see PBOT being more hardcore about better representation.”

To understand more about what was going on, I reached out to PBOT.

PBOT Communications Director Hannah Schafer said the survey’s goal is to assess Portlanders’ bicycling behavior and attitudes and the agency is, “specifically working to identify the conditions that would encourage people to bike more.” Schafer explained that the survey is being conducted on two tracks: the “scientific track” and the “civic engagement track”. Here’s more from Schafer:

“The scientific track proactively targets a sample population with representative demographics to help ensure that it is obtaining a representative sample of Portlanders. That track is tightly controlled by the pollsters and will also include statistical weighting at the back-end to ensure statistical representation. The civic engagement track – which is the survey people are taking and are concerned about – will help bolster the data that will be received from the scientific track.”

So why kick some respondents out? Schafer says the pollsters didn’t expect such a robust response and wanted to reach only people who don’t already consider themselves enthusiastic cyclists. “However, because the pollsters are seeing great interest in the survey and surmise that many of the people responding are both active and passionate about bicycling, they have since modified the survey to allow everybody to take it – even if it overloads certain demographics.”

“That feedback is likely to provide detailed information about the subgroup of Portlanders who identify as people who bike, rather than serve to directly augment data from the scientific track,” Schafer added. “Both approaches have value, and the high number of responses received on the civic engagement track indicates its utility is more in better understanding that sub-group.”

Schafer says people who were initially turned away should be able to take the survey now. “We are so grateful for the tremendous response.”

Take the survey here. It’s up until tomorrow (March 7th).


If there were specific demographics being targeted those should have been the first questions on the survey to ensure that people met the qualifying criteria before spending time filling it out. The same problems might have existed but it would have been more clear because the survey would have ended after 1-3 questions that would have painted a clearer picture as to why they didn’t qualify.

That PBOT is paying a company that does this professionally and they weren’t competent enough to follow this kind of best practice is disappointing. I like that PBOT is commissioning surveys given that it allows them to demonstrate support for things they’re trying to do in concert with letters, testimony, etc. but this demonstrates that they might need to improve their RFP to prevent having to respond to these types of “own goals.”

And they would find that out by… asking you a bunch of questions. You have to do part of the survey before they can know if they need more data from you.

On the other hand, I didn’t see how much was already filled out by the people who were blocked. Presumably if they already had enough data for people who answered the first couple questions a certain way, they would have been booted sooner.

I agree with this statement and if the survey was designed to filter people out early this would be a stronger point. However, navigating through the survey, they begin with demographic questions that would typically disqualify people like where they live, education, and age – if those aren’t being used they should be at the end and if they are then the filtering should stop them before making it to the main questions. Based on this article it would appear that the main goal was to get a certain subset of people who bike, so that should have been the place to start and that way it would have ended well before demographics entered the picture.

It’s more curious that the email announcing this survey went out on the 4th and was only set to be open for four days. The only framing for the survey was to “help inform future planning and community engagement.” An alternative approach could have been to accept all surveys but only focus on the ones that met their criteria within the biking-related questions which would have had the benefit of avoiding this mess in the first place and organizing the questions in a better manner.

“Online survey” and “scientific track” should never be used together like that.

I hope PBOT is not torn between funding street maintenance and the folks making surveys like these where respondents are self-selected and outcome motivated.

Also, why would you expect someone who identifies as Hispanic to have different cycling preferences from someone whose heritage is partially Chinese? I wouldn’t expect that.

I think it’s because it is self selected that they added the demographic limits or whatever. They’re trying to make a representative sample.

“I wouldn’t expect that.”

I didn’t see anything that suggested they thought that either. They said they heard enough from people who already cycle frequently.

You don’t get a representative sample with online surveys, no matter what you do. You get a self-selected sample, which is inherently non-representative, and if you prioritize early responders, it makes it less representative yet.

But I still don’t know how having too many (or too few) respondents with some Hispanic heritage would skew the sample results. What does that have to do with cycling?

(“Apparently they’ve heard enough from young hispanic men in District 4” was a quote from the story, so that’s why I’m using it as an example.)

You’re quoting what some respondents commented on Twitter or to Jonathan. Not what actually happened.

“Schafer says the pollsters didn’t expect such a robust response and wanted to reach only people who don’t already consider themselves enthusiastic cyclists.”

I don’t think it’s clear that they were blocking people based on race based on this, people are just jumping to conclusions. I’d like some clarification if that is true. I would think “young Hispanic men” would be among the less represented responses (along with the other rage-baity comments quoted) so it seems more likely this WASN’T what they were using, and instead something like “do you cycle every day?”

I disagree with your assessment about online or self selected polls. There is nothing magical about it being online that makes it impossible to control for various biases you might get. It’s not like going out and knocking doors is any less biased. There is nothing inherently unscientific about an online poll. Not to say if this was or wasn’t scientific, but the reasoning that it’s a bad poll because PBOT did it or it was online is fallacious.

The city of Portland government is obsessed with race….not cycling.

A couple times various people whose job it is to make sure local government complies with federal race-based standards of public outreach and participation have pointed out to me that the USA legally has 3 races – African-Americans (and specifically those who have ancestors who were slaves in the USA before 1860), through various pieces of legislation from the 1860s and 1950s-70s; American Indians from legislation and ‘treaties’ from the 1600s through the 1930s; and “Everyone Else”, as related to the US Constitution of 1787 and legislation afterwards. “White People” & “Caucasian” aren’t really legally-defined races like African-American and American Indian according to staff I talked with. A term like “African” gets really confusing fast when you start discussing North African Berbers, Arabs, Egyptians, and white South Africans, while “Asian” includes Chinese, Afghans, Iraqis, Israelis, Hindus, and Siberians – and so our federal government tries to avoid defining such people. It gets even nastier when you have a panel made up of people who might be as little as 1/64th American Indian (and 63/64th white) and yet still qualify as a “minority” member of a panel; same with African-Americans, but with the added caveat that a person born to a parent born in Africa might not qualify as an African-American.

To put it in a nutshell, I have served on several panels and committees whereby our Asian-American, Latinx, and foreign-born black members were not considered “minorities” by our compliance officers, at least for reporting purposes.

It may be that PBOT is having to deal with these same federal rules and guidelines.

It shouldn’t be difficult to weight survey responses according to sociodemographic variables so that if a certain demographic is oversampled, each individual response from that group counts a bit less, in proportion to the oversampling. This is what pollsters do, and it allows for a better overall survey, provided that responses from hard-to-reach demographics aren’t weighted so heavily that a few individuals end up standing in for a whole group. FWIW, I filled out the survey yesterday and was able to complete it.

Personally, I would have gone with a weights-based approach like you describe. But there’s nothing inherently problematic about this approach.

Yes, the messaging was goofy. But statistically rigorous polls/surveys aren’t democratic voting exercises (and shouldn’t be). This is a difficult nuance to communicate to an innumerate/statistics-uninclined general population, but it’s real.

I believe the messaging sounds like “you’re not the right skin color, so we don’t need to know what you think”, and I believe that goes beyond goofy!

I also wonder why the heck you wouldn’t just collect the data (let *everyone* fill it out), then, after blinding the researchers to all data except demographic data, count only the representative sample?

Is that somehow too many researcher degrees of freedom?

This is pretty much exactly what JR and I described with the “weights-based” approach. Your order of operations is wrong, but you’re on the right track. So we pretty much agree on everything.

If I were doing it, I’d run the analysis several times, randomly sampling the over-represented demographics down to an appropriate size, and evaluate the sensitivity of that. But I’m not a pollster.
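For anyone curious what that kind of repeated downsampling might look like, here is a rough Python sketch. It is only an illustration under stated assumptions: the function name `downsample_estimates`, the per-group caps, and the sample data are all made up for this example, and nothing here reflects how PBOT or NW Opinions actually analyze their responses.

```python
import random
import statistics


def downsample_estimates(responses, caps, runs=200, seed=0):
    """Repeatedly cap over-represented groups at random and re-estimate the mean.

    responses: list of (group, value) pairs (hypothetical survey answers).
    caps: dict mapping group -> maximum number of respondents to keep.
          Groups without a cap are kept in full.
    Returns one mean estimate per random draw.
    """
    rng = random.Random(seed)

    # Bucket the answers by demographic group.
    by_group = {}
    for group, value in responses:
        by_group.setdefault(group, []).append(value)

    estimates = []
    for _ in range(runs):
        kept = []
        for group, values in by_group.items():
            cap = caps.get(group, len(values))
            if len(values) > cap:
                # Randomly thin the over-represented group down to its cap.
                kept.extend(rng.sample(values, cap))
            else:
                kept.extend(values)
        estimates.append(statistics.mean(kept))
    return estimates


# Made-up data: 300 frequent riders and 40 occasional riders answering a 1-5 question.
rng = random.Random(1)
responses = [("frequent", float(rng.randint(3, 5))) for _ in range(300)]
responses += [("occasional", float(rng.randint(1, 4))) for _ in range(40)]

estimates = downsample_estimates(responses, caps={"frequent": 40})
spread = max(estimates) - min(estimates)
print(f"mean of estimates: {statistics.mean(estimates):.2f}, spread: {spread:.2f}")
```

If the spread across draws is small, the result does not depend much on which of the over-represented respondents happened to be kept.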

A good way to learn about how polls and statistically valid sampling works is to read about George Gallup (yes, that Gallup). He famously correctly predicted FDR’s election after collecting only ~10% of the data that his competitors did. The difference was that he closely watched the demographics of his sample. As a result, he was correct and the “more data is better crowd” embarrassed themselves by predicting a landslide for the wrong candidate.

Can you conduct a rigorous poll or survey online?

If you control/account for demographics in a way that yields a statistically relevant population sample, yes.

How do you make sure you’re not just sampling the online set? And how do you generate a random selection of email addresses to mail the survey to (people were apparently invited to take the survey)?

Phones are becoming a less reliable way to get a random selection from, but at least there you can dial random phone numbers. With email addresses, you need to start with a list of known addresses; given there is no central repository of Portland email addresses, how do you make that work?

If you’re emailing out the survey, you don’t need everyone’s email address. You only need enough email addresses. Collecting a scientifically representative sample typically involves far fewer respondents than most would guess. I’m not in the business of collecting email addresses, so I’m not sure how big the top of the proverbial funnel needs to be to get a representative sample.

But I can’t stress this enough: more data isn’t better than good data.

What if you only have the email addresses of people who testified on bicycle related items (contrived example for illustration purposes)?

If your email addresses are biased, you’ll never get an unbiased result from your survey.

But regardless, the questions they asked (as described elsewhere) make me think the whole exercise is not worth much, regardless of how biased/unbiased the results may be.

This looks terrible from a PR perspective. I’m not a pollster or a statistician, but I’m guessing there was a better way to do this.

I think someone could use a reminder of the Obama administration directive: “Don’t do stupid shit.”

Hi. I’ve deleted this comment and all the replies in the thread because it is totally off-topic and stuff like this just clutters the conversation and prevents other folks from talking about the issue at hand. I hope you understand. Thanks. – Jonathan Maus.

Kind of a non-sequitur, really.

Many of PBOT’s failures can be directly linked to how they have handled “public support” and opinions. There is no evidence that they have ever taken this approach seriously. This latest fumble is disappointing, but I would really like to understand what they are truly hoping to accomplish here, and if they are asking the right questions– not on the survey, but what is their ultimate goal.

It was still a terrible survey, didn’t ask any of the questions we’re actually struggling over, like do you think we need millions of dollars worth of flashing lights for kids to cross the street safely or is some of this car traffic and speed optionally inflicted on us by PBOT’s adherence to highway design standards, yellow centerlines, and lane widths that are inappropriate for streets which lack bike lanes or sidewalks despite their designation for those uses in our Transportation Systems Plan? Would you be willing to drive around the block sometimes or pay for parking to allow kids to safely bike to school and have a fiscally or environmentally sustainable transportation system?

PBOT needs to quit trying to fill the budgets for their wishful plans from 1999 where all drivers are infallible rule-followers who can’t cross a painted line and deal with the reality of induced demand vs a lack of safely connected low-stress bike network due to squandering resources on subsidizing free-for-all car (ab)use.

This is an excellent way to encourage your respondents to lie about their demographics.

They should apply sample weights. If the city is 30% POC but your survey respondents are only 5% POC, you weight those responses heavier than the other 95%. I’m guessing nobody on staff knows how to do that.
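To make the arithmetic concrete, here is a minimal Python sketch of that kind of weighting, using the 30% and 5% figures from the comment above. The function names and the 100 made-up respondents are assumptions for illustration only; real pollsters typically weight on several variables at once, and this is not a description of what PBOT's pollsters do.

```python
def poststratification_weights(population_share, sample_share):
    """Weight for each group = its share of the population / its share of the sample."""
    weights = {}
    for group, pop in population_share.items():
        samp = sample_share[group]
        if samp <= 0:
            raise ValueError(f"no respondents in group {group!r}; cannot weight")
        weights[group] = pop / samp
    return weights


def weighted_mean(responses, weights):
    """responses: list of (group, value) pairs; returns the weighted average value."""
    total = sum(weights[group] * value for group, value in responses)
    norm = sum(weights[group] for group, _ in responses)
    return total / norm


# Shares taken from the comment: city is 30% POC, respondents are only 5% POC.
population_share = {"poc": 0.30, "non_poc": 0.70}
sample_share = {"poc": 0.05, "non_poc": 0.95}
weights = poststratification_weights(population_share, sample_share)

# Hypothetical 100 respondents: the 5 POC respondents answer 1, the other 95 answer 0.
responses = [("poc", 1.0)] * 5 + [("non_poc", 0.0)] * 95
unweighted = sum(value for _, value in responses) / len(responses)
print(weights)
print(f"unweighted: {unweighted:.2f}, weighted: {weighted_mean(responses, weights):.2f}")
```

Each POC response counts about six times, and each of the others counts a bit under three quarters, so the weighted result matches the city's makeup rather than the sample's. The catch, as noted elsewhere in the thread, is that a handful of respondents carrying a weight of six can swing the result a lot.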

I assume this is common practice among those who believe their race will be used to deemphasize their opinions.

Typical government. Gotta control the message, so if they control it by not allowing anyone to fill out a survey then so be it.

It’s just like when the City brought out those statistics recently to tell us how the City isn’t doing so bad economically, crime, etc. I heard directly from one of the people who produces those numbers that they had to, and I quote, “massage the data so it’d look good” by selecting which numbers they would use and which ones they would conveniently leave out.

Yeah, trust in government! Right!

This is incredibly stupid. Having someone get all the way through a survey just to get that response is a slap in the face, and will lead to falsification and disengagement.

We have a $100 million+ budget hole this year. Start filling it with people like the ones responsible for this decision.

If PBOT is trying to learn about casual and infrequent cyclists, is this survey really a good tool? Who’s taking the time to fill this out if they aren’t already a cycling enthusiast?

Probably not many, which is why they already collected enough data from the demographic of enthusiastic cyclists.

If there’s a quota, I’m surprised they let this GenX white cishet guy from district 2 finish the survey. There are so many of us.

I find it delicious that mostly white and economically comfortable people were excluded from a PBOT survey. I hope this becomes the norm for surveys and other communications. In a similar vein, the city should also exclude neighborhood associations that do not adequately reflect Portland demographics from providing testimony or feedback.

Yes, this is surely the best path forward. Thank you, Soren!

Abuse of power and identity politics are fine as long as we are the ones in control? Right?

Pretty much, yes. This hypocrisy pervades both the left and the right, and it’s one reason I can’t abide by either.

Anyone living or working in a neighborhood can join a neighborhood association, it’s not like they’re willfully excluding people.

“In a similar vein, the city should also exclude neighborhood associations that do not adequately reflect Portland demographics from providing testimony or feedback.”

Might want to think this one through. You’d be excluding those you probably want to elevate.

Be better.

In addition to the issues highlighted in the article, the questions it asks are very unspecific and likely unhelpful for any practical purpose. For example, it asks “Which best describes the amount of bicycling you are currently doing in your community?” with the possible responses: “more often than in the past,” “about the same amount as in the past” or “less often than in the past.” It doesn’t define past, so is that to mean the past month? Year? Five years? Ten years? My use has fluctuated up and down in the “past” based on my housing location, job location, kids’ activities, health/injuries, infrastructure changes, etc. Nor does it ask for any specifics like distance, frequency, etc.

It also asks “In the last 12 months, have you seen or heard any advertising or messaging encouraging Portlanders to bicycle more?” but doesn’t specify from whom. From the city? Bike shops? BikePortland?

I don’t know much about NW Opinions, but the way this survey was conducted doesn’t give me a ton of confidence that this is an effective use of taxpayer money.

Typically, the reason for discontinuing a survey after a quota has been met is to be respectful of the respondent’s time. If you only need a certain number of responses to reach the statistical significance that you’re looking for, then additional responses aren’t necessary and may be taking up quite a bit of the person‘s time. It’s meant as a kindness to people who fill out the survey.

That said, there is a difference in perception when the subject is public policy feedback and being unable to contribute feels like you aren’t being listened to. Any type of research should consider the environment in which people encounter it. Every communication is an important touch point, even “just research.”

Additionally, it’s best practice to front load these questions at the beginning of a survey if you are using quota logic, in the interest of not wasting people’s time. I’m not familiar with the particular questions in this survey, but sometimes a quota question requires more context, and it can be difficult to include at the very beginning and still make sense while getting valid responses. Each page further into the survey and you (owner of the survey) risk being seen as an asshole.
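As a rough illustration of front-loaded quota logic, here is a small Python sketch. The `QuotaScreener` class, the cell labels, and the quota sizes are all hypothetical, and it simplifies things by counting a respondent when they start rather than when they finish. It is not how NW Opinions' survey tool works, just the general idea of checking the quota right after the screening questions.

```python
from collections import Counter


class QuotaScreener:
    """Check quotas right after the screening questions, before the main survey."""

    def __init__(self, quotas):
        # quotas: dict mapping a quota cell (any hashable label) -> max respondents.
        self.quotas = dict(quotas)
        self.counts = Counter()

    def admit(self, cell):
        """Return True and count the respondent if their cell still has room.

        Cells with no quota defined are always admitted.
        """
        limit = self.quotas.get(cell)
        if limit is not None and self.counts[cell] >= limit:
            return False
        self.counts[cell] += 1
        return True


# Hypothetical quota: at most 2 frequent riders from District 4.
screener = QuotaScreener({("District 4", "rides weekly"): 2})

for respondent in range(3):
    cell = ("District 4", "rides weekly")
    if screener.admit(cell):
        print(f"respondent {respondent}: continue to main questions")
    else:
        print(f"respondent {respondent}: quota full, end survey after the screener")
```

With the check placed here, anyone who gets turned away has only answered the one or two questions needed to place them in a cell.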

Source: I’ve conducted mixed methods user research for a long time.

This should be pinned to the top, IMO, and not let the knee jerk reactions flood the space.

This may have been a ham fisted implementation, but there isn’t anything inherently wrong with what they were doing.

It would have been very easy to weight the responses by demographic and avoid this whole mess. You know this.

Usually a scientific poll or survey would try to get as many responses as possible, then use demographic information on the responders to compare to the general population and then use that to weight the responses. I’ve never heard of a system in which people were cut off from even taking a poll or survey based on demographic limits. Very strange. It’s also strange because I’ve heard that when the City of Portland conducts a survey, demographic information questions are always supposed to be at the end of the survey, are required to be optional, and can only be used to get a sense of who they are reaching, and are not supposed to be used in coding the survey, to avoid bias.