Uber has been testing its new self-driving cars on human subjects since last year and now it appears one of them has killed a person who was walking across a street. The collision happened in Tempe, Arizona late last night. According to a local news report, “Tempe Police says the vehicle was in autonomous mode at the time of the crash and a vehicle operator was also behind the wheel.”

This is the second self-driving Uber (that we know about) that has been involved in a collision. Last month a local news station in Pittsburgh reported that one of them slammed into another car while in self-driving mode.

After last night’s death, Uber has announced it will immediately suspend its testing in Tempe and Pittsburgh, as well as San Francisco and Toronto.

Thankfully in Portland our local leaders and transportation officials have not allowed a private company to test their deadly product on humans.

Back in April the Portland Bureau of Transportation launched its Smart Autonomous Vehicle Initiative (SAVI). In doing so, Portland made it clear that it welcomed innovative companies to try new autonomous vehicle (AV) products and services here, but only if safety was the number one priority.

In July, PBOT released a Request for Information (RFI) aimed at companies like Uber. “If you want to test your technology, we are game to work with you,” PBOT staffer Ann Shikany proclaimed in a webinar with potential respondents. “But before we just start testing, we want to understand what’s out there.” It was PBOT’s attempt to “begin engagement with the private sector in a more intentional way.”


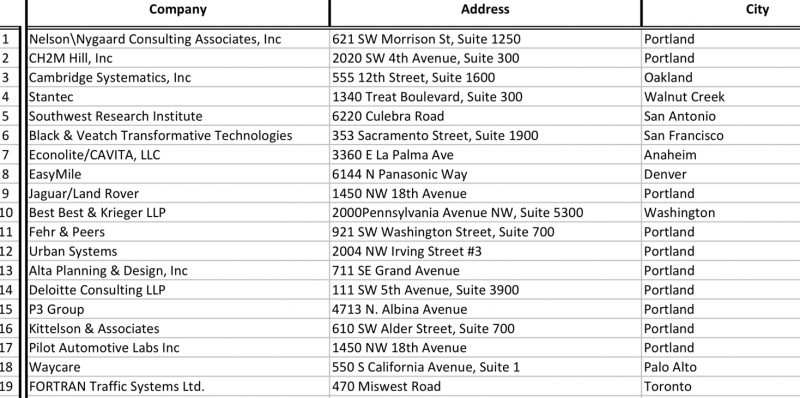

19 companies responded. It doesn’t look like Uber is on the list…

The RFI itself makes it clear that any company that wants to test AV tech must first prove it does not conflict with our Vision Zero Action Plan. PBOT also told prospective companies that no AV testing permits would be accepted until all City of Portland regulations were completed.

Here’s one of six goals of the SAVI effort that relates to safety:

Ensure the safety of our residents and businesses by requiring AV providers to align with our Vision Zero goal to eliminate all traffic deaths and serious injuries by 2025. AVs must show that they can and will stop or avoid pedestrians, bicyclists, animals (to include domestic, game and livestock), disabled people, emergency vehicles, red lights, and stop signs.

PBOT Project Manager Peter Hurley emphasized during the webinar that if/when a company is selected to test here they must start in a “controlled situation” that’s not in the public right-of-way. Ann Shikany added, “We’re thinking it might be more of a closed track, obstacle course situation in an industrial area rather than a heavily pedestrian-trafficked residential street.” (Last night’s fatal collision in Tempe occurred in a suburban setting at an intersection of two large arterial roads.)

“We don’t want to be passive respondents to this technological change,” Hurley said at a bicycle advisory committee meeting last August, “We want to have a proactive role.”

So far PBOT has made a valiant effort to protect us from the dangers of irresponsible companies like Uber. We’re grateful for that.

For more on Portland’s Smart Autonomous Vehicle Initiative, check out the city’s website.

— Jonathan Maus: (503) 706-8804, @jonathan_maus on Twitter and jonathan@bikeportland.org


Why not ban Uber like London?

It definitely feels qualitatively different to be killed by a robot rather than by an inattentive human driver, but this feels like a tricky question. If we assume that robot drivers will cut fatalities significantly, are we really best-served by a zero-tolerance policy? I mean, if we stick with 100% human drivers, the total traffic fatalities are almost certain to be higher than with autonomous cars, no?

I’ve been grappling with this too, from a slightly different angle. Since it seems like we are getting to the stage where these robot cars are close to being capable drivers, we need to test them somewhere. It’s probably best to go for a spot with consistent road design, no inclement weather, fewer vulnerable road users, etc. For better or worse, driver-centric cities like Tucson fit the bill (I haven’t been there myself, but I’m guessing it’s close enough in style to Phoenix, which I am familiar with).

Basically, I wouldn’t want Division to be the first stomping ground of the robot cars, with the extremely high amount of somewhat random foot/bike traffic. Better a place with big giant long blocks and very obvious corner crosswalks.

And I gotta say it: “I for one welcome our robot overlords”.

i don’t welcome predatory companies whose goals are to “disrupt” public transportation.

If I knew they were programmed to recognize unmarked crosswalks, and to obey the new 20 mph speed limits, I’d feel a lot safer around them than many of the drivers cutting through my neighborhood.

I’m pretty sure that they are programmed to obey the speed limits. Part of the problem, at least in the Arizona incident, seems to be allowing 40mph in proximity to pedestrians.

If it can’t spot a pedestrian that has already traveled across two lanes of a three-lane road directly in front of it, it’s going too fast.

I’m not sure where you get your information, but AVs are often programmed not to obey speed limits. Moreover, the Uber vehicle that killed a human being was going 38 mph in a 35 mph zone where there is significant pedestrian traffic (technically not a crosswalk, but an ambiguous crossing point).

Several of those facilities exist. One such testing ground is the decommissioned military facility in Concord, CA: http://gomentumstation.net.

Apparently too early to make that assumption.

We don’t yet know that the pedestrian in Tempe was crossing at an unmarked crosswalk.

reports state that they were struck outside of the crosswalk… the crosswalks are marked at this intersection…

This is a great question that a lot of people are asking at this moment. But there are some underlying issues that should be considered.

First, it obscures the conversation to talk about AVs as if there is one possible AV to test. Of course, there are many AVs and the question is “how safe do they have to be to test on humans, and which AV/AV algorithm is safe enough to test on humans.”

Second, if AVs are an intervention intended to reduce mortality, how do they compare to other available measures? If we say that there are 40K traffic deaths per year, and that reducing that number by 5K is worth 35K deaths by AV, have we honestly compared AVs to other means of death reduction? E.g., lower speed limits, eliminating dangerous car designs, stricter licensing requirements, and improving modes of transportation that carry a lower risk of death.

The reality is AVs are not being introduced to reduce deaths. They are being introduced to create wealth. As a society, we will have to decide how much AV death is tolerable, because AV companies will want the tolerated AV death number to be similar or a little less than what we currently tolerate so that they can compete for market share based on speed and convenience.

However, it is disingenuous to consider our current acceptance of traffic death, danger, pollution and dominance of public space to be the standard. What sucks is that we have this current standard because of a lack of thoughtful regulation of cars, and chances are that this regulation will be even weaker against AVs.

In exchange for letting private companies gain control over some of our most vital public spaces, our roads, they should at least meet the standard of reducing deaths to near zero. It is possible, but it will mean more work and less profit for AV companies.

“if AVs are an intervention that is intended to reduce mortality” – that’s a big “if”! AVs are intended to offer commercial profit growth in many sectors ranging from private transportation to online services delivery (captive audience), and as we even see in this week’s roundup, alcohol sales. Driving is a responsibility that competes with the attention those profit opportunities demand. The theory that robot cars are safer than human drivers (though they may actually be) is what marketing would call a “value proposition.”

“and chances are that this regulation will be even weaker against AVs”

i have come to believe that (without very strong regulation) AV technology will likely lead to more victim-blaming and more restrictions placed on people walking/rolling.

if corporations and our captured government assume the AI tech does not make mistakes, then it’s always the pedestrian’s fault. a natural extension of this kind of thinking is to simply ban pedestrians (and people biking) from areas where they could put themselves at risk. after all, if AV technology is “safer” than walking or rolling, from a vision zero perspective, we should discourage walking and rolling.

This. Absolutely this.

The (still theoretical) greater safety of an AV world should not come at the cost of a dystopia where suburban arterials and city streets alike are off-limits for anyone foolish enough to intrude on them outside the protection of their two-ton shell. Sure, we’re sometimes promised visions of ubiquitous microtransit; parking lanes being reclaimed for greenery; and cars, bikes, and pedestrians engaging in an effortless and perfectly safe dance.

But it’ll take hard work and political engagement to make that happen. We could easily fall ass-backwards into exactly the scenario you describe.

Oh, right. Two interesting discussions of this possibility: https://www.citylab.com/transportation/2018/03/this-is-how-the-self-driving-dream-might-become-a-nightmare/556070/ and https://www.theatlantic.com/technology/archive/2016/06/robocars-only/488129/

Glad to see Wall-E mentioned in the first commentary. For those that haven’t seen it, picture yourself going for a walk in this environment:

https://youtu.be/s-kdRdzxdZQ?t=15s

Driving safely is so easy even a robot can do it! Except when those pesky cyclists and pedestrians ‘come out of nowhere’, that is.

I’m waiting for the first time an AV mistakes the gas for the brake and drives through a Plaid Pantry…

Remember HAL 9000!

I’m afraid I can’t do that, Dave.

Seems like robot cars are a menace, but then so are regular Uber/Lyft cars, with respect to their frequently observed behaviors. I would be interested to understand how Uber plans to program its robot cars to stop in order to pick up and drop off passengers in urban areas. Perhaps they could be programmed to do this in a way that does not involve stopping in the bike lane, blocking traffic or creating a disruption, parking on sidewalks, failing to use signals, driving erratically and unpredictably, and being generally disrespectful to everyone else for the convenience of the Uber and its passenger. Their behavior has placed the little blinking dot on the screen above the importance of all else. If they can program both their human drivers and their future robot drivers to pull over in a safe location in dense areas, even if it means their fare walks a block or two, I might be a little more receptive to their business.

If humans are so scary, then who will program these vehicles?

As long as those folks aren’t practicing drunken or distracted programming…

Or worse… untraversed branch conditions!

I would not be so quick to jump to conclusions and start calling AVs “deadly products”. I don’t think anyone can make such claims without analyzing accidents per miles driven and investigating the behavior and limitations of the technology. Remember that human drivers are hitting other cars and pedestrians with some frequency. Do we have data showing that the current AVs have a higher accident rate than human drivers?

That said, I support the city’s careful approach. AV testing brings jobs but also brings risks, and that trade-off needs to be examined.

John,

AVs are computerized vehicles that rely on code to prevent deaths to other road users. That’s a deadly product IMO.

But they do a much better job than humans at preventing deaths today and are bound to get even better. AVs will lower fatalities. Are you against that?

Hi Bill,

To clarify: My outrage at this death is because Uber is a shitty company (search for #deleteuber) that bullies its way into cities and acts like it can do anything it wants. They launched a product that wasn’t ready and now someone has died. The lack of regulation in this part of the industry is really absurd, and it speaks to the larger bias toward driving that our entire system suffers from.

As for the issue itself, I think it’s way too early to have an informed debate about whether or not AVs are safer than existing manually-driven cars. We really don’t know how AVs will work on a large scale because they aren’t used on a large scale yet.

Overall, I’m torn on the issue. I understand that computer algorithms are less error-prone than humans — but I also know humans control the robots and I know that the auto-industrial complex is NOT looking out for us. They only want money and they do not care about outcomes until it hurts their bottom line.

Of course I’m not against lowering fatalities! But I am against ceding control of roadways to the highest bidder and making our roads even less humane than they are now.

Do we know that? What is Uber’s fatality per 100,000 VMT rate? Oh, right. We don’t know, because they don’t share it.

We are surrounded by machines that rely on code to prevent deaths to users. Fly in a plane, ride in a train, take an elevator, drive a car – there are countless lines of code controlling those machines and if the code doesn’t work as intended, people die. Human drivers are no different, they are biological machines that rely on their own form of code to avoid killing others. Most of the time.

We don’t know how safe or dangerous the current AVs are. The data is out there but has yet to be reported on. Obviously they are not as safe as they will eventually be – otherwise they wouldn’t still be in the testing phase. It is quite reasonable for Portland to decide we don’t want to find out how safe they are, that we don’t want to be part of the development of AVs, that we are going to let other cities do the testing and prove the safety of AVs before we will allow them in our city.

However, I think it is important to be fact-based and analytical about the safety of AVs. Because here is what is going to happen. AVs will be developed to the point where they can replace human drivers; commercial drivers will try to keep AVs off the roads by using the same emotional “deadly product” rhetoric; they will do this to save their jobs even if replacing humans with AVs will save lives.

Heck, you relied on code to tell JM how much code we really rely on! 🙂

I agree. I think JM has grossly exaggerated the situation here. One might as well rail against food produced with modern tractors and harvesters because some of the food, improperly prepared, stored or consumed, will cause death and disease even though modern food production is doing much better than we could do with livestock-pulled plows.

Right now, the number one reason people give for not riding bikes (which may or may not be the actual reason) is fear of being run over by motorists. Anything that reduces that reality is a step forward, imo. Even the Vision Zero folks acknowledge that human errors will continue to be plentiful. Why not take steps to accelerate the removal of the most deadly source of road death, the drivers?

Yeah, your opinion of Uber and opinion on AV are two different things. I happen to agree with Jonathan’s take on Uber, but they are not the only company working on AV.

The author of this editorial has allowed his distaste for Uber, the company and its business plan, to cloud his judgement of autonomous vehicles and their future potential to at least partly ameliorate automotive-induced carnage. This is apparent in his characterizations, such as the one of Uber as “a private company (that tests) their deadly products on humans.”

Clearly there are many obstacles to be overcome and refinements to be made before AV technology attains an acceptable safety profile. It will never be 100% safe, just like no other form of travel is 100% safe or ever will be. Rather than demonize Uber’s AV program in such a melodramatic way, show a little journalistic balance (dare I say integrity?) and also point out how buses, trains, bikes, skateboards, escalators, elevators, roller skates, footwear and just about any other form and style of human conveyance has resulted in deaths many times over since they were introduced to the public.

Hyperbole of the magnitude employed in this op-ed is rarely conducive to a useful discussion on any subject, and the hypocrisy of singling out Uber when there are dozens of instances of other products and modern conveniences resulting in human disability or death is troubling to me, especially coming from this otherwise progressive blog.

The number of deaths from testing an experimental technology on people should be zero. It is a bad idea to base testing on the predicted future safety of AVs or compare to the rates of human caused fatalities now. The central question is how many deaths are acceptable during product development, not the eventual balance of deaths. A death this early in the process raises red flags for a lack of rigor from Uber.

This line of reasoning would prohibit essentially all medical trials.

Now I’m intrigued. Tell me about the medicines that we currently use where people died in phase I trials. Do you think that AV algorithms are being given the same level of scrutiny as new drugs?

“Do you think that AV algorithms are being given the same level of scrutiny as new drugs?”

Drugs and code are different; individual bio-chemistry comes into play with drugs.

The FAA mandates DO-178C certification for aircraft software, while SAE standards for automotive code are less rigorous. When you fly in a plane you have things like dual- and triple-dissimilar architectures in play, running code in parallel that’s been subject to static analysis, Modified Condition/Decision Coverage (MC/DC) ensuring all machine instructions are justified and validated, and traceability of all features to testing, functional justification, and safety (and increasingly security) mitigating controls. It is substantially easier to get an automotive control system to market than avionics.
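For readers unfamiliar with MC/DC, here is a minimal Python sketch of the idea, assuming the simpler “unique-cause” form of the criterion: each condition in a decision must be shown, by a pair of tests that differ only in that condition, to flip the decision’s outcome. The `brake` decision is a hypothetical example, not code from any real vehicle, and real DO-178C work measures coverage with qualified tools on the actual source and object code.

```python
from itertools import combinations
from typing import Callable, Dict, Sequence, Tuple

TestVector = Tuple[bool, ...]


def mcdc_covered(decision: Callable[..., bool],
                 tests: Sequence[TestVector]) -> Dict[int, bool]:
    """Report, per condition index, whether the test set shows that
    condition independently flipping the decision (unique-cause MC/DC)."""
    n = len(tests[0])
    covered = {i: False for i in range(n)}
    for a, b in combinations(tests, 2):
        # Indices where the two test vectors disagree.
        diff = [i for i in range(n) if a[i] != b[i]]
        # A pair counts only if exactly one condition changed and the
        # decision's outcome changed with it.
        if len(diff) == 1 and decision(*a) != decision(*b):
            covered[diff[0]] = True
    return covered


# Hypothetical decision: brake if an obstacle is detected and either the
# path is blocked or sensor confidence is low.
def brake(obstacle: bool, blocked: bool, low_conf: bool) -> bool:
    return obstacle and (blocked or low_conf)


# Four tests for three conditions (n + 1), the minimum MC/DC requires.
tests = [
    (True, True, False),    # brakes
    (False, True, False),   # no obstacle: does not brake
    (True, False, False),   # path clear, confident: does not brake
    (True, False, True),    # low confidence: brakes
]

print(mcdc_covered(brake, tests))      # prints {0: True, 1: True, 2: True}
print(mcdc_covered(brake, tests[:3]))  # prints {0: True, 1: True, 2: False}
```

With only the first three tests, condition 2 (`low_conf`) is never shown to matter, which is exactly the kind of gap MC/DC is meant to expose.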

Now I’m intrigued. Tell me about the medicines that we currently use where people died in phase I trials. Do you think that AV algorithms are being given the same level of scrutiny as new drugs? Do you think the FDA or an IRB would approve a human safety study where a human death would be considered an acceptable outcome?

AVs can be tested and rolled out safely or they can be rushed to market in an unsafe manner. Vioxx is a good example of a drug that was harmful but continued to be sold by Merck while they sat on data indicating that it was harmful. Do you think that Uber’s AV branch is more ethical than Merck? Do you think that Uber will be conservative and risk-averse in a competitive market with less stringent oversight and regulations?

If I say I have a drug that will cure lung cancer, but I am going to kill a couple dozen people while testing it, will you let me start testing it in people?

The line of reasoning that I suggested is the basis for medical trials.

Patient deaths during drug clinical trials are not frequent, but they do happen. Drug testing goes through multiple phases designed to assess safety and tolerability, including “test tube” testing, animal testing, healthy volunteer human testing starting with very low doses, then patient testing starting with low doses.

Despite those precautions, many drugs on the market today had one or more patient deaths during their clinical trials.

The deaths are not always due to an adverse effect of the drug. Often the deaths are due to the underlying disease; the drug failed to save a patient, or the patient received a placebo. But sometimes the deaths are due to adverse effects of the drug. Often, such deaths will cause the clinical trials to be halted. But when the disease is itself very lethal and otherwise untreatable, clinical trials may proceed even after deaths due to drug adverse effects.

For example, there is a new class of immunotherapy drug where several patients have died during the clinical trials due to adverse effects of the drugs. However, patients with the disease have essentially 100% mortality, a life expectancy of only several months, and no other treatment. So these trials proceeded despite the deaths, and the doctors learned how to better manage the dose and adverse effects.

Correct. You would be hard-pressed to find a drug that was approved for broad use and wasn’t scrapped after it caused severe toxicity or a fatality in healthy people. I don’t know of any, but I would be interested to know the names of examples. And yes, when people have diseases with very high mortality, high-risk drugs can be used. But this level of risk tolerance is not acceptable for experimenting with AVs in a lax regulatory environment. My point was in response to the “you have to break a few eggs to make an omelet” shrug that I have seen in response to this death. It is not a clean comparison by any means, but if I said that I would one day cure cancer, saving millions of lives, so people dying in testing is no big deal, I would be ignoring the massive ethical and regulatory framework that’s in place to ensure safety in human studies. And even when there are deaths in high-risk drug studies, it’s a huge deal, and the company and researchers involved are examined with tremendous scrutiny, unlike the response to Uber.

Bottom line is, the imagined near-perfect AV world that we fantasize about doesn’t justify taking short cuts or unnecessary risks when experimenting on people.

People agree to be in those medical trials and agree to the risks. The equivalent here would be pulling people off the street and performing new, risky medical procedures on them against their will. People should be allowed to opt out of being test subjects for risky product development.

there is no judgement to be clouded when speaking of facts…

parsing your quoted sentence you come up with facts from the articles this references…

true, the wording was strong, but not incorrect…

uber is a private company… motor vehicles are deadly… and uber is testing their motor vehicle products on humans…

you came up with the AV bias on your own, as the quoted statement was clearly aimed at uber and not the industry at large (who so far hasn’t killed anyone)…

I’d say that as an assumption I’d guess uber is doing a terrible job of programming these things… as opposed to google who seems to really want to stand by their motto, especially with things like this that can kill you…

And meanwhile, using statistics for 2015, when human drivers killed over 6,000 pedestrians and bicyclists, likely at least 15 others were killed that same day in the traditional manner, and it wasn’t newsworthy. Granted, had the Uber AV not been on the road testing, there might have been one fewer death yesterday. Or the car behind it may well have caused the death instead. It is one thing to demand safe protocols for testing AVs (the one in this case had a human driver, which doesn’t seem to have helped) and another to take a reactionary position and describe AVs as death machines marketed by people and companies with no sincere interest in safety.
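The “at least 15” figure follows from spreading the commenter’s rounded 2015 total evenly over the year, a simplification, since deaths do not fall uniformly by day:

$$
\frac{6{,}000\ \text{deaths}}{365\ \text{days}} \approx 16.4\ \text{deaths per day}
$$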

If you like Portland’s approach and want it to remain legal, CALL YOUR REPRESENTATIVE. Seriously. There is an existing Senate bill, “AV START” or S. 1885, that would preempt states and cities from enforcing safety rules on self driving cars. (Only as-yet nonexistent federal rules would be allowed.)

See https://www.tomudall.senate.gov/news/press-releases/udall-senators-self-driving-car-bill-needs-stronger-safety-measures-consumer-protections

and

http://www.autonews.com/article/20180316/MOBILITY/180319765/lobbying-senate-holdouts-av-start-act

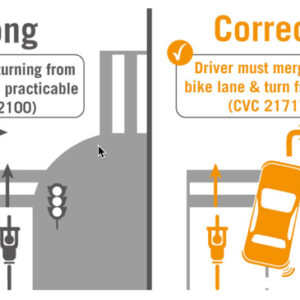

Unfortunately, our plethora of right-turn auto lanes that cross straight-through bike lanes ensures it will keep happening here. This insane design is at least as much at fault here as the driver.

So you’re suggesting the bike lane be built to the left of a right-turn-only lane (where space permits), and the driver crosses before the intersection? Or you’re suggesting cars merge with bikes to make the turn, like in California?

Genuinely curious, as whenever some of us describe techniques for safely navigating intersections we are accused of Forester zealotry.

I didn’t know about the California rule, but yes, that seems like a much better practice. And I had thought this collision was a result of the car turning across the lane the “pedestrian” was cycling in, but apparently that wasn’t true.

That’s a contentious position here. Most Californian cyclists I know prefer the merge as opposed to the ‘right cross’ approach (where cars stay out of the bike lane and turn across it), but it’s what they know; I had to get used to it when I moved here. It works for people who are used to cycling with cars and have developed a sense of when to signal and assert position, but unfortunately that’s a terrible presumption for the mass population (and does nothing to get more people riding).

You can find arguments about the pros and cons of both approaches on this blog’s comment sections, but the bottom line is intersections are a challenge to the safety of both cyclists and pedestrians (especially with legal right turns on reds).

I would like to know how AVs will respond to all of our intersections without stop signs. What about those (worst idea ever) stop signs with a sign underneath them that permit turning right without stopping?

I actually encountered an AV dump-truck-type construction vehicle backing into a lot on the Going St bike route. It was weird and terrifying. It was pulling forward and backing up (adjusting the vehicle so it would back in correctly). I had looked up to make eye contact with the driver when I realized there wasn’t one. I had no way to know whether it would stop what it was doing or run me over.

I’ve run into this myself. It turns out the vehicle was remote/radio controlled by the “driver,” who was standing alongside with the controller so they could see where the vehicle was backing.

If this is going to be a thing we need easily recognized signals that the driver is not in the cab. Sometimes it seems that construction crews think the whole world is their jobsite, but if the jobsite overlaps the street the driver needs to be able to see passers-by and respect their right-of-way. For Portland, this is a work in progress. You could almost say that development in this town is an experiment on–nevermind.

Remote-controlled equipment like that has been around for a long, long time (and does not qualify as “autonomous”). True that it’s dangerous – I think the equipment designers envisioned its use in suburban developments where the buildings are surrounded by space and parking spots and not on-street in cities with multi-modal traffic flows.

It appears that the first look at the video from the vehicle makes it “likely” that there was nothing that the Uber could have done to avoid the crash.

https://arstechnica.com/cars/2018/03/police-chief-uber-self-driving-car-likely-not-at-fault-in-fatal-crash/

We don’t have access to the video, but I have some concerns about what has been reported.

The crash appears to have happened at this location (northbound Mill just south of Curry road): https://goo.gl/maps/UgcuyZXgDbB2

Note the pedestrian path on the left that dead ends onto this road.

The bike was laid down on the sidewalk where this stick is: https://goo.gl/maps/3uW39wMQrYA2

Considering that she was pushing an old mountain bike loaded up with shopping bags, I don’t think she darted into traffic as has been suggested (and is almost always suggested in pedestrian fatalities). Also, the woman was crossing from left to right when she was hit by the FRONT RIGHT of the vehicle, so she had almost made it past the vehicle when she was hit. The vehicle made NO ATTEMPT to stop.

Two other concerns: Why was an AV going faster than the speed limit? And why are SUVs being used as AVs? These are two factors that are known to increase pedestrian deaths. If we are going to usher in a new era of safety, why not implement factors that we know would improve safety?

At this stage, SUVs are often used as AVs because of their greater weight-carrying capacity. The rooftop sensor array and the onboard computing equipment are heavier than many people realize. Also, the power needed to run the sensors and computers for a modern AV, even with today’s state-of-the-art processors, is 2,500 watts or more. This requires special heavy-duty alternators and inverters, and these fit in an SUV more easily than in a car.

Sounds like a job for the humble minivan.

Path of the walker and where she was hit: https://ibb.co/eFQWuc

Per this TV report, with video of the site: https://www.abc57.com/…/tempe-pd-uber-self-driving…

The taillight on her bike is still blinking.

Corrected link: https://www.abc57.com/news/tempe-pd-uber-self-driving-vehicle-hits-kills-pedestrian

They just released the video. Based on what I saw the Uber should have seen the person via LIDAR sensors and taken some evasive action. The pedestrian was crossing illegally but the AV failed. I was willing to consider that the Uber did not have time to react but that is not what I saw on the video. The whole idea of LIDAR is being able to see things beyond human eyes.

I think the video and other data should be released. This is not a run of the mill accident. The public has an interest in seeing how this one happened.

I also question why an AV should be cruising above the speed limit. There are sometimes reasons to exceed the speed limit in passing and merging situations, but not to cruise above the speed limit.

That article also states that the AV was speeding, and blames the victim’s death on “shadows”.

What cutting-edge tech and programming is Uber running?

With infrared tech from 20 years ago, I can watch small animals wandering in total darkness… So tell me why the AV didn’t “see” the person???

Police are saying the AV was going 40 in a 35 zone. So, just like a human driver…

I support AV in principle. But if we’re going to have robots do the driving we need some Laws. Keep it simple, just a few will do. For example: An AV will not move through the space containing a vulnerable road user. If the future progress of a detected vulnerable road user crosses the track of an AV it must slow down enough to remain under control in all outcomes. If some part of the environment around an AV cannot be scanned by its detection systems that space must be assumed to contain a vulnerable road user on a crossing path.
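The three proposed rules can be sketched as a speed cap. This is a minimal Python sketch under stated assumptions: the `Zone` type stands in for hypothetical perception output, “remain under control in all outcomes” is interpreted as “able to stop before reaching the zone,” and the 0.5 s reaction time and 6 m/s² deceleration are illustrative placeholders, not values from any real AV.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Zone:
    """A stretch of road ahead of the AV (hypothetical perception output)."""
    distance_m: float     # distance along the AV's path to the zone
    vru_present: bool     # a vulnerable road user is detected in the zone
    vru_will_cross: bool  # a detected VRU is predicted to cross the AV's track here
    occluded: bool        # the sensors cannot see into the zone


def stopping_speed(distance_m: float, reaction_s: float, decel_mps2: float) -> float:
    """Largest speed (m/s) from which the AV can stop within distance_m.

    Solves v * reaction_s + v**2 / (2 * decel_mps2) = distance_m for v >= 0.
    """
    if distance_m <= 0:
        return 0.0
    a = 1.0 / (2.0 * decel_mps2)
    b = reaction_s
    return (-b + math.sqrt(b * b + 4.0 * a * distance_m)) / (2.0 * a)


def speed_cap(limit_mps: float, zones: Iterable[Zone],
              reaction_s: float = 0.5, decel_mps2: float = 6.0) -> float:
    """Return the highest speed the three proposed rules allow."""
    cap = limit_mps
    for z in zones:
        # Rule 1: never move through space that contains a VRU.
        # Rule 2: a VRU predicted to cross the track is treated the same way.
        # Rule 3: unscanned space is assumed to hold a VRU on a crossing path.
        if z.vru_present or z.vru_will_cross or z.occluded:
            cap = min(cap, stopping_speed(z.distance_m, reaction_s, decel_mps2))
    return cap


limit = 35 * 0.44704  # 35 mph in m/s
print(speed_cap(limit, [Zone(40.0, False, False, False)]))  # unchanged: the limit
print(speed_cap(limit, [Zone(15.0, False, False, True)]))   # lower: must stop within 15 m
```

The design choice here is that all three rules collapse into one check: any zone that might hold a vulnerable road user caps speed at whatever still lets the vehicle stop short of it, so a dark, unscanned verge slows the car even when nothing has been detected.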

That kind of AV I can live with. The problem with Uber is, they are the last bunch of people I’d want to be developing the AV of the future. Kind of like having your military in charge of developing nuclear power, alas.

A good reason to focus on accidents and fatalities caused by AVs at this early stage, and to apply great pressure in the form of laws and liability judgments, is that the designer of an AV has much latitude (or will, once the tech is better understood) in how many sensors to use, what quality they are, and the speed and integration of the processing into the car’s driving system. If the financial and legal penalties for accidents are low, companies will go with the bare minimum to get by, at the price of more accidents in iffy situations. If the penalties are high, future AVs will have to use the best sensors, and more of them, in their designs. A good example of this is comparing Chinese and German CNC machine tools. European workers’-compensation laws are very strict, so German machines have very high-quality redundant safety equipment that is impossible to bypass, while current Chinese machines have the bare minimum to meet US standards and use low-quality safety gear that breaks quickly and is easy to remove. The safety of future AVs will depend on how hard we push now.

If this is indeed the first fatality (collateral damage) due to the imposition of an unnecessary tech, it needs a memorial befitting its significance. A bronze sculpture honoring the first human slaughtered by this nonsense is just as culturally relevant as that of Vera Katz for a floating trail.

How will the federal AV START Act (S. 1885) affect the city’s policy? This bill is apparently likely to pass and is very broad in scope in preventing cities from interfering with AV testing in any way.

Why can’t Uber rent race circuits or the Big 3 carmakers’ test tracks? If they’re going to be on public roads, I think it’s legit self-defense for cyclists and pedestrians to vandalize, torch, or otherwise disable AVs.

This was sent out yesterday…

NACTO Statement Re: Automated Vehicle Fatality in Tempe, AZ [March 19th 2018]

——————————————————————————-

Linda Bailey, Executive Director of the National Association of City Transportation Officials (NACTO), issued the following statement in response to a pedestrian being killed by an automated vehicle in Tempe, AZ:

Last night, an autonomous vehicle hit and killed a pedestrian in Tempe, AZ. We do not know much yet about this incident—which is believed to be the first time someone walking or biking was killed by a vehicle operating autonomously in the U.S.

NACTO is encouraged that the National Transportation Safety Board is sending a team to provide an in-depth, independent assessment of the tragic crash. However, what is already clear is that the current model for real-life testing of autonomous vehicles does not ensure everyone’s safety. While autonomous vehicles need to be tested in real-life situations, testing should be performed transparently, coordinated with local transportation officials, and have robust oversight by trusted authorities.

In order to be compatible with life on city streets, AV technology must be able to safely interact with people on bikes, on foot, or exiting a parked car on the street, in or out of the crosswalk, at any time of day or night. Cities need vehicles to meet a clear minimum standard for safe operations so the full benefits of this new technology are realized on our complex streets. Responsible companies should support a safety standard and call for others to meet one as well.

We cannot afford for companies’ race-to-market to become a race-to-the-bottom for safety…

https://nacto.org/2018/03/19/statement-on-automated-vehicle-fatality/?mc_cid=1655902416&mc_eid=8f31e4e041

In order to be compatible with life on city streets, AV technology must be able to safely interact with people on bikes, on foot, or exiting a parked car on the street, in or out of the crosswalk, at any time of day or night.

Thank you! I wish we could hold drivers to the same standard.

Dan, that is the “great promise” and “selling point” of the technology (for investors) and the streamlined regulatory stance that the DOTs (state and federal) are taking…

Uber is not the only one we should be concerned about here.

Google (Waymo) pulled off a great PR bait-and-switch on the American public, introducing driverless tech with their adorable and harmless looking two-seater (the “Firefly”), before killing it in 2016[1] and switching to the strategy of sticking their sensor arrays on the outside of much larger existing vehicles (granted, their minivan is probably half as deadly as the Uber SUV [2]).

Meanwhile, Google and Uber are part of the same industry lobbying group which successfully killed a bill in California which would have required vehicles smart enough to drive themselves to be smart enough to not run on oil. (Not surprising Google would oppose that; among other things, they’re now rolling out driverless semis [3].)

The same industry group is pushing a federal bill which seems designed to preempt such policies in any state (as well as, perhaps, these rules that Portland has developed [4]).

1: https://etfdailynews.com/2016/12/13/the-google-car-is-dead/

2: https://www.newscientist.com/article/dn4462-suvs-double-pedestrians-risk-of-death/

3: https://www.theverge.com/2018/3/9/17100518/waymo-self-driving-truck-google-atlanta

4: https://www.theverge.com/2018/3/16/17130190/av-start-legislation-lobbying-washington-feinstein

Looking at the aerial view, the AZDOT landscape architects and engineers who designed this highway have done almost 99% of the work of creating an unmarked “crossing” at this location that is highly attractive to bikes and peds: there are nice paved “walking” areas in the shape of an “X” connecting two desire lines, from the Canal and Loma trail to area destinations (the theatre) and to the transit stop (EART) to the west… The state or city staff should be ashamed of the incomplete/ineffective solution they relied on: just installing some small 18×12 R9-3 signs prohibiting pedestrian crossing…

Once this case goes to the courts… the issue should not be whether the cyclist should have been on the roadway to begin with (given the bike lane and the lack of any prohibition on bikes crossing the lanes here), but that the combined autonomous vehicle technology + professional driver were driving too fast and thus unable to adequately avoid this collision.

After looking at Streetview and aerial maps… my speculation is that this cyclist’s route choice from the trail ends may have been made to avoid either the dark tunnels/undercrossings (fear of crime) or the unprotected bike lanes through the huge multilane intersections that she would otherwise have had to use, instead of this shortcut, to reach the transit stop on the far side…

Strictly speaking, per MUTCD Section 2B.51, the No Pedestrian Crossing sign (R9-3) prohibits ONLY pedestrians, NOT bicycles, from crossing the lanes here… To prohibit bicycles as well, the No Bicycles sign (R5-6) should have been used to supplement the existing R9-3 sign. [Though again, these signs should NOT be the sole treatment at this location, given the poor landscaping and engineering designs that combine to make this location so desirable and yet so hazardous for peds and bikes.]

https://mutcd.fhwa.dot.gov/htm/2009/part2/part2b.htm#section2B51

“ADOT further advocates that bicyclists have the right to operate in a legal manner on all roadways open to public travel, with the exception of fully controlled-access highways. Bicyclists may use fully controlled-access highways in Arizona except where specifically excluded by regulation and where posted signs give notice of a prohibition” – AZBikeLaw

Correction: that should read ADOT, not AZDOT.

Everybody needs to read this article from Slate about the collision:

https://slate.com/technology/2018/03/uber-self-driving-cars-fatal-crash-raises-questions-about-our-infrastructures-readiness.html

It contains important details about the collision – a woman walking a bike across a poorly-lit eight-lane road – and echoes a lot of what Jonathan has been saying for years about the need to upgrade our infrastructure to include cyclists and walkers.

Video of the collision, from the exterior and interior cameras:

https://youtu.be/XtTB8hTgHbM

My take:

1 – An average human driver with average attentiveness, vision and reflexes may well not have avoided the accident. To an average driver, the pedestrian really did come out of nowhere, doing something that was suicidal.

2 – A far above average human driver with extreme attentiveness, terrific night vision, and extremely fast reflexes might have avoided the accident. Think race car driver etc.

3 – An AV with all the sensors and high speed processing we expect them to have . . . I think it should have detected the pedestrian when she was still in the adjacent lane, and avoided the accident. Because #3 should be superior to #2 and far superior to #1, at least in this sort of pretty straightforward situation.

4 – There is a legal conundrum here. If the police and DA assess the Uber AV’s actions according to the standard applicable to #1, they might reasonably find no reason to take legal action. Is that the appropriate legal standard? Should we hold AVs to the legal standard of care applicable to the average human driver? Or to a higher standard? If the latter, what standard? I think it will take some time for the law to figure this out.

5 – The safety driver is obviously not a #2. He or she might well be a #1, though.

If this were a human driver who could only see as far as the headlights reach, he or she would be guilty of vastly outdriving the headlights. For the police to suggest that what can be seen in this video corresponds directly to human eyesight is a fallacy. I can see considerably further in the dark than this video is able to show. There’s no way a person directly in front of my vehicle would become visible to me less than 2 seconds before I hit them, unless I’m going 50+ on the highway.
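“Outdriving your headlights” means the distance you can see ahead is shorter than the distance you need to stop. Here is a rough Python check of the 2-second scenario at the 40 mph police figure. It assumes a typical human perception-reaction time of 1.5 s and hard braking at 7 m/s² on dry pavement. Both are assumed values, not taken from the police report or the video.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mile = 1609.344 m, 1 hour = 3600 s


def stopping_distance_m(speed_mps: float, reaction_s: float, decel_mps2: float) -> float:
    """Distance covered while reacting plus distance covered while braking to a stop."""
    reaction_distance = speed_mps * reaction_s
    braking_distance = speed_mps ** 2 / (2 * decel_mps2)
    return reaction_distance + braking_distance


def sight_distance_m(speed_mps: float, warning_s: float) -> float:
    """How far ahead a hazard is when it first becomes visible warning_s seconds before impact."""
    return speed_mps * warning_s


speed = 40 * MPH_TO_MPS  # 40 mph, the speed police reported
visible_at = sight_distance_m(speed, warning_s=2.0)
needed = stopping_distance_m(speed, reaction_s=1.5, decel_mps2=7.0)  # assumed human values
print(f"hazard visible at {visible_at:.1f} m; stopping needs {needed:.1f} m")
print("outdriving the headlights" if needed > visible_at else "can stop in time")
```

Under these assumptions the hazard first appears about 36 m ahead, but the car needs about 50 m to stop. A driver who could really see only 2 seconds ahead at 40 mph could not stop in time, which is why it matters that human night vision reaches further than the video suggests.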

The driver was not looking at the road.

The now-deceased person appears to come out of nowhere because the video camera is nowhere near as sensitive to light in low-light settings.

Uber’s negligence led to this person’s death.

Here’s a good take on it.

https://www.forbes.com/sites/samabuelsamid/2018/03/21/uber-crash-tape-tells-very-different-story-from-police-report-time-for-some-regulations/#17f20e0248df

It’s important to remember how the pedestrian’s behavior was described to us:

Pushing a bicycle laden with plastic shopping bags, a woman abruptly walked from a center median into a lane of traffic and was struck by a self-driving Uber operating in autonomous mode.

“The driver said it was like a flash, the person walked out in front of them,” said Sylvia Moir, police chief in Tempe, Ariz., the location for the first pedestrian fatality involving a self-driving car. “His first alert to the collision was the sound of the collision.”

From viewing the videos, “it’s very clear it would have been difficult to avoid this collision in any kind of mode (autonomous or human-driven) based on how she came from the shadows right into the roadway,” Moir said.

“The pedestrian was outside of the crosswalk. As soon as she walked into the lane of traffic she was struck,” Tempe Police Sergeant Ronald Elcock told reporters at a news conference.

Does anybody really trust the police to conduct non-biased investigations into pedestrian fatalities? I sure don’t.

This is so frustrating to see play out. I have literally been telling anyone who will listen for many, many years now that the police, and the media who parrot them, are often extremely biased in these cases against the walker/biker and in favor of the auto user. This Uber thing is no surprise at all. Fortunately, in this case we have a good video. Imagine all the dead people who will forever be blamed for their own deaths by police/media/society. It makes me sick.

What a human driver should have seen and done isn’t really the issue.

The issue is that the AV sensors (radar, lidar, video with night vision) are not limited by human vision or attention. They should have been able to detect the pedestrian entering the roadway, regardless of headlight coverage. I’m pretty certain they did. The AV thus should have braked or swerved.

https://mobile.nytimes.com/2018/03/23/technology/uber-self-driving-cars-arizona.html

Waymo (Google) AV cars averaging 5,600 miles between safety driver interventions. Uber cars averaging 13. Detail in article.

Recall that Waymo sued Uber for theft of technology; the settlement required Uber not to use any Waymo hardware or software in Uber’s AV cars. Maybe that set Uber back?

Uber basically needs to be first to AV cars, or the company will be gone. Once AV technology is working and proven, big companies will launch AV taxi fleets and the human driver Uber service won’t be able to compete. Would you take a ride in a Google (or GM, or Amazon) AV taxi that is purpose built (electric, compact, wide doors, seats facing each other, tons of headroom and legroom) for $5, or ride with an Uber driver in his random car for $10? Uber is already losing tons of money and burning through cash. Imagine how much worse it will be if half their business quickly disappears.

So the pressure to catch up with Waymo, who have a multi-year lead in AV, must be huge. Waymo is getting ready to street test AV cars with no safety drivers. Uber is obviously nowhere close. Is Uber getting desperate enough to cut corners?

A thought about safety drivers:

Humans are not capable of sitting passively for hours on end, staring out a windshield, and then suddenly detecting and reacting to an emergency in a couple of seconds. As AVs get better, the safety driver will become even less useful.

Imagine being the safety driver in a Waymo car, you have to sit there for almost 6K miles of driving (250 hours maybe) before something happens that you have to deal with. If that something is the car becoming confused by a situation (construction, accident ahead, etc) and stopping unable to proceed, so that a human is needed to take over and drive through the confusing location, you can do it. If that something is someone appearing in your headlights at night a couple seconds before impact, you won’t be able to do anything.

I expect the next generation of AV test car, that has no safety driver, will have a remote operator who can take over when the car is confused and stopped.

Here is an interesting article about the limitations of safety drivers:

https://www.google.com/amp/s/www.wired.com/story/uber-crash-arizona-human-train-self-driving-cars/amp

Maker of the LIDAR sensor used by Uber:

“We are as baffled as anyone else,” Thoma Hall wrote in an email. “Certainly, our Lidar is capable of clearly imaging Elaine and her bicycle in this situation. However, our Lidar doesn’t make the decision to put on the brakes or get out of her way.”

More interesting news out of Arizona: Uber’s recent cut in the number of lidar sensors on its newest test vehicles (the Volvo has 1, versus the previous Ford’s 7), and the failure of the AZ Governor’s Self-Driving Oversight Committee, which held only 1 ‘public’ meeting back in 2016 and then dropped off the radar (it should have held at least 6 more quarterly oversight meetings to give guidance to the DOT, police, insurers, AZ university researchers, etc. on how to manage on-road testing):

https://www.abc15.com/news/region-phoenix-metro/central-phoenix/governors-office-arizonas-self-driving-oversight-committee-fulfilled-its-purpose